Nikhil Prakash

22nd floor, 177 Huntington Ave

Boston, MA 02115

I’m a fourth-year Ph.D. student at Northeastern University, advised by Prof. David Bau. I completed my Bachelor of Engineering at RV College of Engineering, Bangalore, India, in fall 2020, with a focus on electrical and computer science.

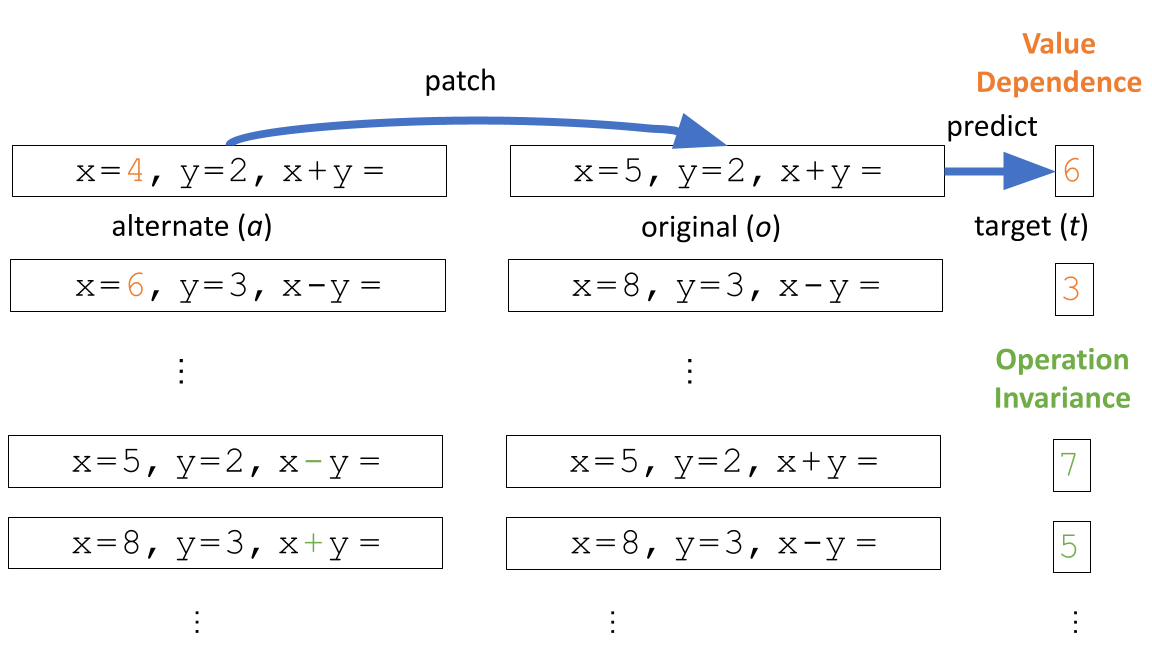

Last summer, I interned at Apple with the AIML Visualization team, working on mechanistic interpretability of math reasoning in LLMs. Previously, I interned at the Practical AI Alignment and Interpretability Research Group with Dr. Atticus Geiger and at SERI-MATS (first phase) with Neel Nanda. Before that, I worked as a visiting scholar at the Max Planck Institute for Security and Privacy, and had stints at the Korea Advanced Institute of Science & Technology and the Indian Institute of Technology Ropar.

Broadly, my interest lies in understanding the internal mechanisms of deep neural networks, with a current focus on cognitive abilities such as in-context reasoning and theory of mind, as well as exploring their downstream applications.

I have received invaluable support from many people throughout my career, and as a result, I’m always happy to assist others and share insights from my experiences. Please feel free to reach out.

news

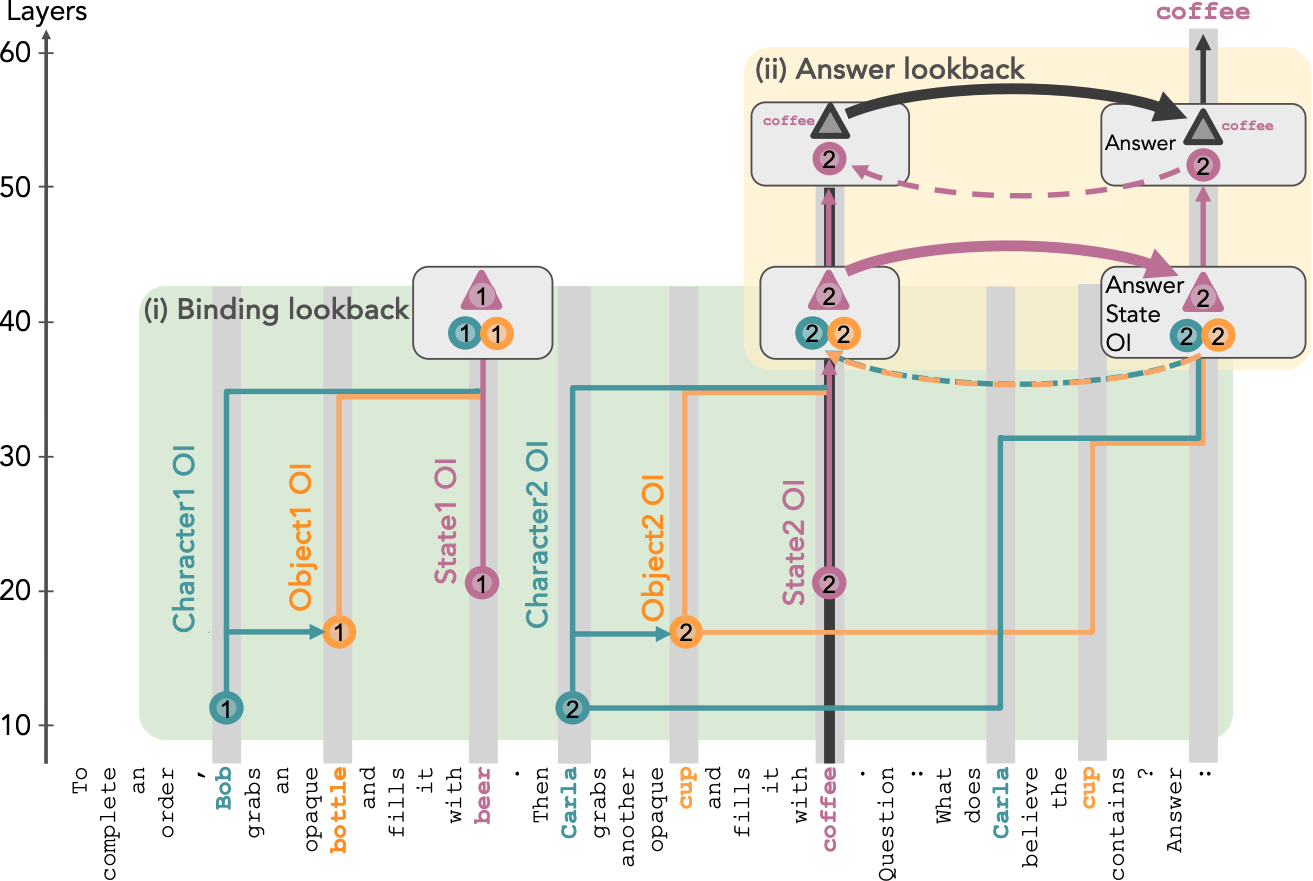

| Jan, 2026 | Our lookback paper got accepted at ICLR 2026. See you in Rio🇧🇷! |

|---|---|

| Jan, 2026 | Excited to be TAing David’s Neural Mechanics course! |

| Jan, 2026 | Reviewing for ICML 2026. |

| Dec, 2025 | My Apple internship work, Constructive Circuit Amplification, is now on arXiv. |

| Dec, 2025 | Excited to attend NeurIPS to present our Lookback paper at Mech Interp and CogInterp workshops. |

| Oct, 2025 | Invited to give a talk at Boston University NLP Group. |

| Oct, 2025 | Excited to attend COLM 2025 in Montreal! |

| Sep, 2025 | Gave a talk in Algorithms and Behavioral Science Coffee Seminar hosted by MIT Economics. |

| Aug, 2025 | Attended and presented my work at the 2nd New England Mechanistic Interpretability (NEMI) workshop. |

| Aug, 2025 | Last day of Apple Internship at Apple Park! |

| Jun, 2025 | Invited to give a talk at ploutos.dev on our recent belief tracking paper. |

| May, 2025 | Started my Apple internship with the AIML Visualization team! |

| Apr, 2025 | Oral Presentation of our recent work on Belief Tracking Mechanism in LMs at New England NLP 2025. |

| Mar, 2025 | Reviewing for ICML 2025, COLM 2025, TMLR. |

| Mar, 2025 | PhD Candidacy Achieved! |

| Mar, 2025 | Accepted research internship offer from Apple. |

| Jan, 2025 |

Our paper NNsight and NDIF: Democratizing Access to Foundation Model Internals got accepted to ICLR 2025! |

| Nov, 2024 | Received a complimentary NeurIPS 2024 registration for my service as a reviewer. |

| Aug, 2024 | Reviewing for ICLR 2025. |

| Jul, 2024 | Our paper NNsight and NDIF: Democratizing Access to Foundation Model Internals is on ArXiv! |

| Jul, 2024 | Interning at Practical AI Alignment and Interpretability Research Group with Dr. Atticus Geiger. |

| Jun, 2024 | Reviewing for NeurIPS 2024 (main conference and workshop proposals). |

| May, 2024 | Invited talk at Practical AI Alignment and Interpretability Research Group. |

| May, 2024 | Invited talk at Computational Linguistics and Complex Social Networks in Indian Institute of Technology Gandhinagar. |

| May, 2024 |

Attending ICLR 2024 at Vienna in-person |

| Apr, 2024 | Invited talk at New England NLP 2024. |

| Apr, 2024 | Co-organizing Mechanistic Interpretability Social at ICLR 2024 with Gabriele Sarti. |

| Apr, 2024 |

Awarded Google's Gemma Academic Program |

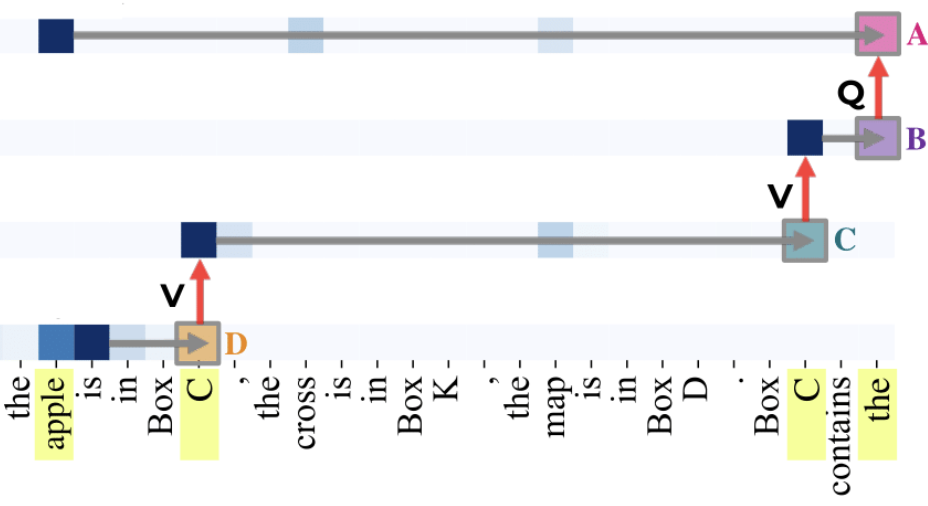

| Jan, 2024 | Our paper “Fine-Tuning Enhances Existing Mechanisms: A Case Study on Entity Tracking” got accepted at ICLR 2024! |

| Oct, 2023 | Served as a reviewer for ATTRIB 2023 workshop @ NeurIPS. |

| Jul, 2023 | Our short paper got accepted at Challenges of Deploying Generative AI workshop at ICML 2023! |

| Jul, 2023 | Participated in Stanford Existential Risks Initiative ML Alignment Theory Scholars (SERI-MATS) 2023. |

| Jun, 2023 | Participated in Alignment Research Engineer Accelerator (ARENA) 2023. |

| Feb, 2023 | Our paper got acceptetd at IUI 23! |

| Sep, 2022 |

|